Achieving Energetic Superiority Through System-Level Quantum Circuit Simulation

2024-06-30 17:50


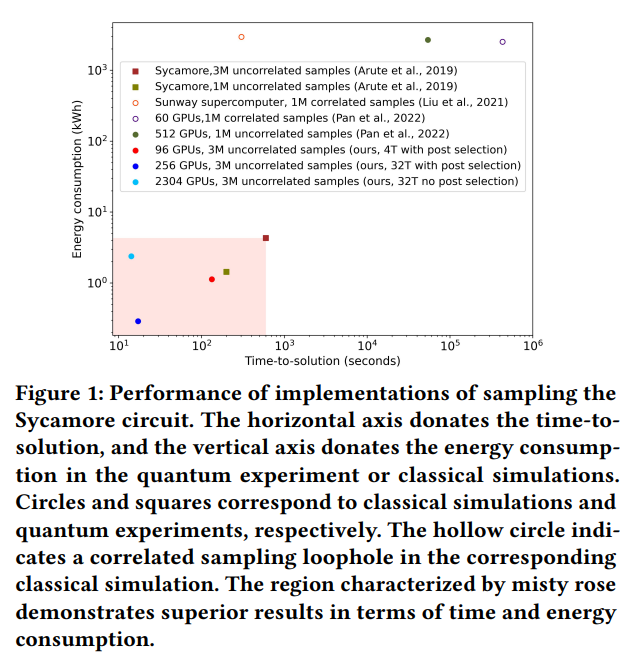

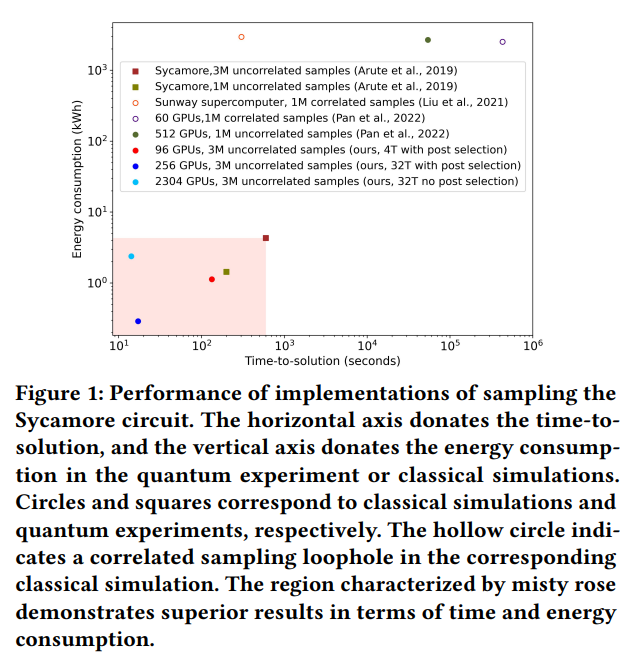

Quantum computational superiority boasts rapid computation and high energy efficiency. Despite recent advances in classical algorithms aimed at refuting the milestone claim of Google’s Sycamore, challenges remain in generating uncorrelated samples of random quantum circuits.

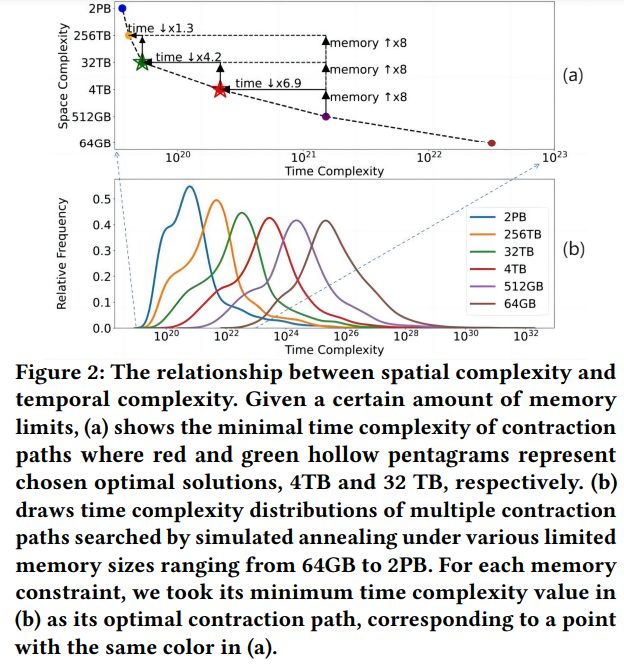

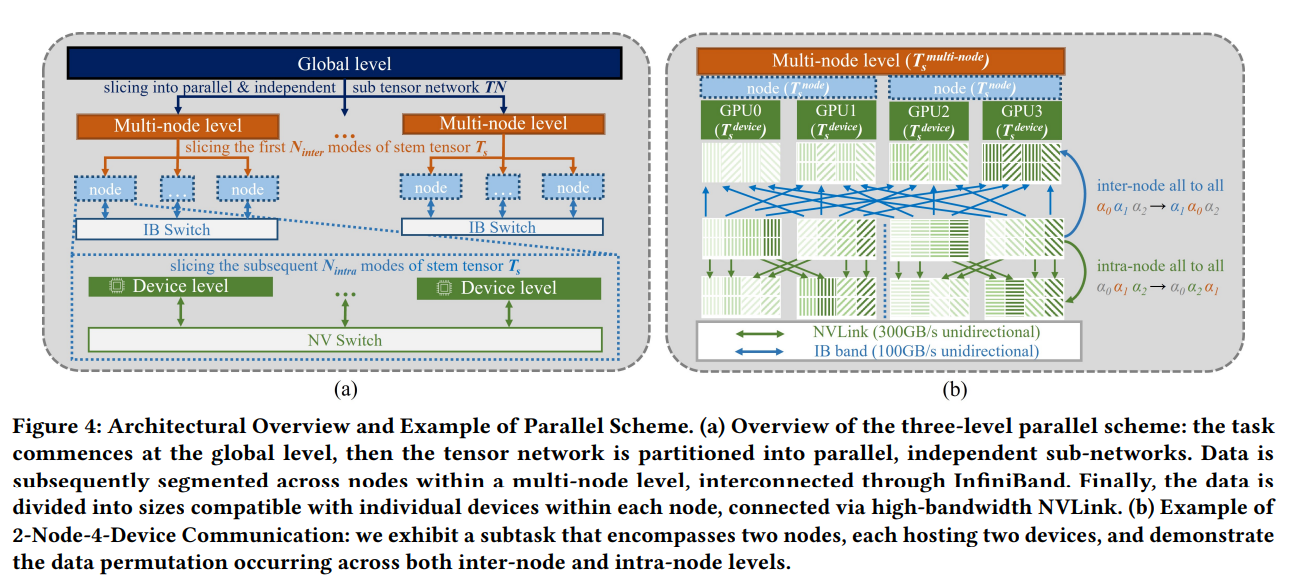

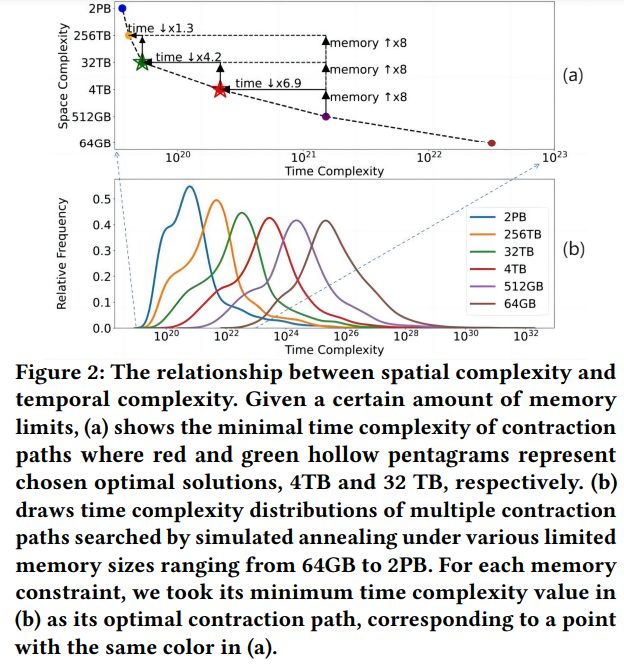

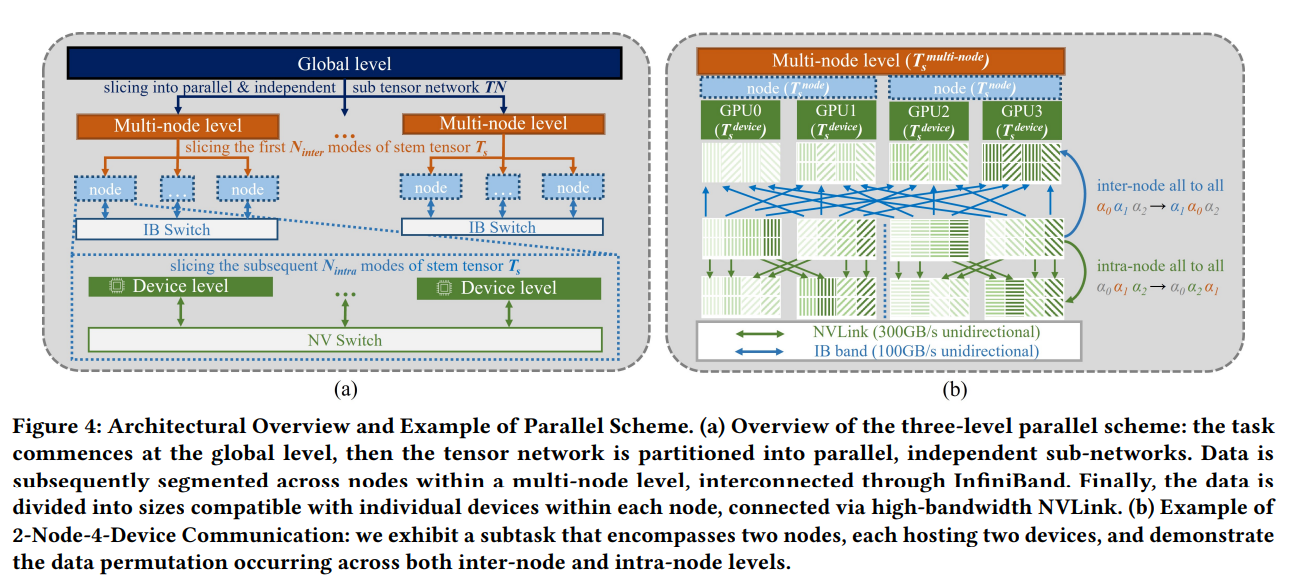

In this paper, we present a groundbreaking large-scale system technology that leverages optimization at the global, node, and device levels to achieve unprecedented scalability for tensor networks. Our techniques accommodate large-scale tensor networks requiring up to tens of terabytes of memory, surpassing the memory constraints of a single node, and scale to 2304 GPUs with a peak computing power of 561 PFLOPS in half precision. Notably, we achieve a time-to-solution of 14.22 seconds with an energy consumption of 2.39 kWh at a fidelity of 0.002. Our most remarkable result is a time-to-solution of 17.18 seconds with an energy consumption of only 0.29 kWh, which reaches an XEB of 0.002 after post-processing. This outperforms Google’s quantum processor Sycamore, which recorded 600 seconds and 4.3 kWh, in both speed and energy efficiency.
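To make the comparison concrete, the figures quoted above can be turned into ratios with a few lines of Python. This is a minimal sketch using only the numbers in the abstract. The dictionary keys and the helper names `ratios` and `average_power_kw` are illustrative, not taken from the paper. The average-power figure assumes a constant draw over the whole run, and the per-GPU value assumes the 561 PFLOPS peak is spread evenly over the 2304 GPUs.

```python
# Figures quoted in the abstract; names and labels are illustrative.
SYCAMORE = {"seconds": 600.0, "kwh": 4.3}
RUNS = {
    "14.22 s run": {"seconds": 14.22, "kwh": 2.39},
    "17.18 s run": {"seconds": 17.18, "kwh": 0.29},
}


def ratios(run, baseline=SYCAMORE):
    """Return how many times faster and more energy-frugal a run is than the baseline."""
    return {
        "speedup": baseline["seconds"] / run["seconds"],
        "energy_ratio": baseline["kwh"] / run["kwh"],
    }


def average_power_kw(run):
    """Average power in kW, assuming constant draw over the run (an assumption)."""
    return run["kwh"] * 3600.0 / run["seconds"]


summary = {name: ratios(run) for name, run in RUNS.items()}

# Peak half-precision throughput per GPU in TFLOPS, assuming an even split.
per_gpu_tflops = 561e15 / 2304 / 1e12

if __name__ == "__main__":
    for name, r in summary.items():
        print(f"{name}: {r['speedup']:.1f}x faster, "
              f"{r['energy_ratio']:.1f}x less energy, "
              f"avg power {average_power_kw(RUNS[name]):.0f} kW")
    print(f"Peak per GPU: {per_gpu_tflops:.1f} TFLOPS")
```

Under these assumptions, the 17.18-second run is roughly 35 times faster than the quoted Sycamore figure and uses roughly 15 times less energy, while the 14.22-second run is faster still but saves less than a factor of two in energy.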

Article: https://arxiv.org/abs/2407.00769